Last year, I noticed a tweet from the European Cockpit Association (ECA) on EU flight time limitations (FTLs) (Commission Regulation (EU) 83/2014, applicable from 18 February 2016). The FTLs have been controversial since their inception. The ECA’s ‘Dead Tired’ campaign website lists a number of stories from 2012-13, often concerning the scientific integrity of the proposals, and the goal conflicts between working conditions and passenger safety on the one hand and commercial considerations on the other. The ECA has highlighted consecutive disruptive schedules, night-time operations and inadequate standby rules as problems. Didier Moraine, an ECA FTL expert, stated that “basic compliance with EASA FTL rules does not necessarily ensure safe rosters. They may actually build unsafe rosters.”

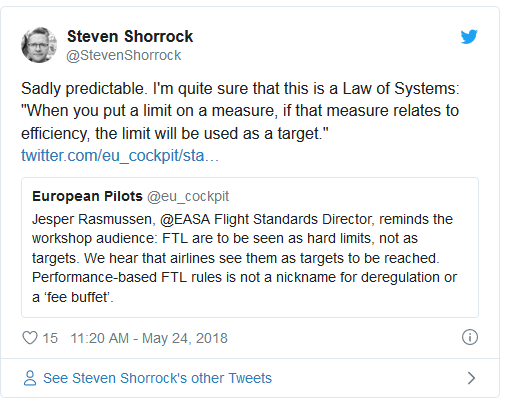

In May 2018, the ECA Twitter account reported that EASA’s Flight Standards Director Jesper Rasmussen reminded a workshop audience that FTLs are to be seen as hard limits, not as targets.

A February 2019 study published by the European Union Aviation Safety Agency (EASA) found that prescriptive limits alone are not sufficient to prevent high fatigue during night flights.

“When you put a limit on a measure, if that measure relates to efficiency, the limit will be used as a target.”

This relates to Goodhart’s Law, expressed succinctly by anthropologist Marilyn Strathern as follows: “When a measure becomes a target, it ceases to be a good measure.” It also relates to the Law of Stretched Systems, expressed as follows by David Woods: “Every system is stretched to operate at its capacity; as soon as there is some improvement, for example in the form of new technology, it will be exploited to achieve a new intensity and tempo of activity.” Woods also notes that this law “captures the co-adaptive dynamic that human leaders under pressure for higher and more efficient levels of performance will exploit new capabilities to demand more complex forms of work.” But this particular aspect of system behaviour concerning limits, simple as it is, is not quite expressed by either.

An everyday example of the Law of Limits can be found in driving. As in most countries, British roads have speed limits that depend on the road type. In 2015, on roads with a 30 mph speed limit, the average free-flow speed at which drivers chose to travel, as observed at sampled automatic traffic counter (ATC) locations, was 31 mph for cars and light goods vehicles. (The figure was 30 mph for rigid and articulated heavy goods vehicles [HGVs], and 28 mph for buses.) In the same year, on motorways with a 70 mph speed limit for cars and light goods vehicles, the average speed was 68 mph for cars and 69 mph for light goods vehicles. Most drivers will be familiar with driving as close to the limit as possible. Many things contribute to this, primarily a drive for efficiency coupled with a fear of the consequences of exceeding the limit. Many more examples can be found in everyday life, wherever a limit is imposed on a measure and then treated as a target because efficiency gains can be made.

The following is a post on Medium by David Manheim, a researcher and catastrophist focusing on risk analysis and decision theory, including existential risk mitigation, computational modelling, and epidemiology. It is reproduced here with kind permission.

Shorrock’s Law of Limits

Written by David Manheim, 25 May 2018

I recently saw an interesting new insight into the dynamics of over-optimization failures, stated by Steven Shorrock: “When you put a limit on a measure, if that measure relates to efficiency, the limit will be used as a target.” This seems to be a combination of several dynamics that can co-occur in at least a couple of ways, and despite my extensive earlier discussion of related issues, I think it’s worth laying out these dynamics along with a few examples to illustrate them.

First, there is a general fact about constrained optimization that, in simple terms, says that for certain types of systems the best solution to a problem is going to involve hitting one of the limits. This was formally shown in a lemma by Dantzig about the simplex method: for a convex objective function over a bounded convex feasible region, the maximum is attained at an extreme point of that region. (Convexity is important, but we’ll get back to it later.)
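To make the extreme-point idea concrete, here is a minimal brute-force sketch. It is not the simplex method itself, just a check on a made-up two-variable linear program; the constraints, the objective and the function names are all hypothetical. A linear objective is convex, so the lemma applies: the code enumerates the corners of the feasible region, picks the best one, and confirms with a grid search that no other feasible point does better.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical feasible region: each (a, b, c) means a*x + b*y <= c.
CONSTRAINTS = [
    (1, 0, 4),    # x <= 4
    (0, 1, 3),    # y <= 3
    (1, 1, 5),    # x + y <= 5
    (-1, 0, 0),   # x >= 0
    (0, -1, 0),   # y >= 0
]


def feasible(x, y):
    """True if (x, y) satisfies every constraint."""
    return all(a * x + b * y <= c for a, b, c in CONSTRAINTS)


def vertices():
    """Intersect each pair of constraint boundaries and keep feasible points."""
    pts = set()
    for (a1, b1, c1), (a2, b2, c2) in combinations(CONSTRAINTS, 2):
        det = a1 * b2 - a2 * b1
        if det == 0:
            continue  # parallel boundaries never meet
        # Cramer's rule, using exact fractions to avoid rounding error.
        x = Fraction(c1 * b2 - c2 * b1, det)
        y = Fraction(a1 * c2 - a2 * c1, det)
        if feasible(x, y):
            pts.add((x, y))
    return pts


def objective(x, y):
    """A linear (hence convex) objective to maximize."""
    return 3 * x + 2 * y


best_vertex = max(vertices(), key=lambda p: objective(*p))

# Brute-force check on a 0.1-step grid covering the region.
grid = [(Fraction(i, 10), Fraction(j, 10)) for i in range(41) for j in range(31)]
best_grid = max((p for p in grid if feasible(*p)), key=lambda p: objective(*p))

print("best vertex:", tuple(map(float, best_vertex)),
      "value:", float(objective(*best_vertex)))
```

In this toy region the best corner is (4, 1), where two constraints (x ≤ 4 and x + y ≤ 5) are both hit at once, and the grid search finds nothing better.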

When a regulator imposes a limit on a system, it’s usually because they see a problem with exceeding that limit. If the limit is a binding constraint (that is, if you limit something critical to the process and require a lower level of the metric than is currently being produced), the best response is to hug the limit as closely as possible. If we limit how many hours a pilot can fly (the initial prompt for Shorrock’s Law), or how many hours a trucker can drive, the best way to comply with the limit is to get as close to the limit as possible, which minimizes how much it impacts overall efficiency.
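The "hug the limit" response can be sketched with a deliberately simple, hypothetical model: an operator whose weekly contribution from rostered hours is assumed to be `120*h - h**2`, which peaks at 60 hours when there is no limit. The numbers and the `profit` and `best_roster` names are illustrative assumptions, not data from any airline, haulier or regulation.

```python
def profit(hours):
    """Hypothetical weekly contribution from rostering `hours` of work.

    Revenue rises with hours; the quadratic term is an assumed stand-in
    for rising costs, so the unconstrained optimum is 60 hours.
    """
    return 120 * hours - hours ** 2


def best_roster(limit, max_hours=100):
    """Most profitable whole number of hours that does not exceed `limit`."""
    return max(range(0, min(limit, max_hours) + 1), key=profit)


for limit in (40, 55, 60, 80):
    chosen = best_roster(limit)
    # Binding: the choice sits on the limit and one more hour would pay.
    binding = chosen == limit and profit(limit + 1) > profit(limit)
    print(f"limit={limit:>3}h  chosen={chosen:>3}h  binding={binding}")
```

With a limit below 60 hours the chosen roster sits exactly on the limit and the constraint is binding; at or above 60 hours the limit no longer changes the choice. The regulated figure becomes the operating point precisely when it restricts something the operator would otherwise do more of.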

There are often good reasons not to track a given metric: it may be unclear how to measure it, or it may be expensive to measure. A large part of the reason that companies don’t optimize for certain factors is that they aren’t tracked. What isn’t measured isn’t managed, but once there is a legal requirement to measure it, it’s much cheaper to start using that data to manage it. The companies now have something they must track, and once they are tracking hours, it would be wasteful not to also optimize for them.

Even when the limit is only sometimes reached in practice before the regulation is put in place, formalizing the metric and the limitation makes it more explicit, leading to reification of the metric. This isn’t only because of the newly required cost of tracking the metric; it’s also because what used to be a difficult-to-conceptualize factor like “tiredness” now has a newly available, albeit imperfect, metric.

Lastly, there is the motivation to cheat. Before fuel efficiency standards, there was no incentive for companies to explicitly target the metric. Once the limit was put into place, companies needed to pay attention, and paying attention to a specific feature means that decisions are made with this new factor in mind. The newly reified metric gets gamed, and suddenly there is a ton of money at stake. And sometimes the easiest way to perform better is to cheat.

So there are a lot of reasons that regulators should worry about creating targets, and ignoring second-order effects caused by these rules is naive at best. If we expect the benefits to just exceed the costs, we should adjust those expectations sharply downward, and if we haven’t given fairly concrete and explicit consideration to how the rule will be gamed, we should expect to be unpleasantly surprised. That doesn’t imply that metrics can’t improve things, and it doesn’t even imply that regulations aren’t often justifiable. But it does mean that the burden of proof for justifying new regulation needs to be higher than we might previously have assumed.