Me, myself and TMI

Imagine you are an operator in a nuclear power control room. An accident has started to unfold. During the first few minutes, more than 100 alarms go off, and there is no system for suppressing the unimportant signals so that you can concentrate on the significant alarms. Information is not presented clearly; for example, although the pressure and temperature within the reactor coolant system are shown, there is no direct indication that the combination of pressure and temperature means that the cooling water is turning into steam. There are over 50 alarms lit in the control room, and the computer printer registering alarms is running more than 2 hours behind the events.

This was the basic scenario facing the control room operators during the Three Mile Island (TMI) partial nuclear meltdown in 1979. The Report of the President’s Commission stated that, “Overall, little attention had been paid to the interaction between human beings and machines under the rapidly changing and confusing circumstances of an accident” (p. 11). The TMI control room operator on the day, Craig Faust, recalled for the Commission his reaction to the incessant alarms: “I would have liked to have thrown away the alarm panel. It wasn’t giving us any useful information”. It was the first major illustration of the alarm problem, and the accident triggered a flurry of human factors/ergonomics (HF/E) activity.

But the problem was never solved. A familiar pattern has resurfaced many times in the 35 years post-TMI in several industries, from oil and gas (Texaco Milford Haven, 1994) to aviation (Qantas Flight 32, 2010). And now, WebOps is starting to see similar patterns emerging, as e-commerce experiences its own ‘disasters’. In web engineering and operations, things don’t explode, leak, melt down, crash, derail, or sink in the same sense that they do in nuclear power or transportation, but the business implications of a loss of availability of systems that support the stock market or major e-commerce sites are enormous. As Allspaw (in press) noted, “outages or degraded performance can occur which affect business continuity at a cost of millions of dollars”.

In such events, the alarm scenario is likely to vary widely between companies, because alarm design is not subject to the kind of formal process that is commonplace in (other) safety-critical industries, which often have alarm philosophies, design processes and criteria. Unlike system users in the nuclear and aviation domains, users in web engineering and operations are also designers (Allspaw, in press); they can design their own alarms. This is a double-edged sword. As systems become increasingly automated and complicated, the number of monitoring points and associated alarms tends to proliferate. And ‘common sense’ design solutions often spell trouble. At this point, it’s worth going back to basics.

Alarm design 101

First, what are alarms for? The purpose of alarms is to direct the user’s attention towards significant aspects of the operation or equipment that require timely attention. This is important to keep in mind. Second, what does a good alarm look like? The Engineering Equipment and Materials Users Association (EEMUA) (1999) summarise the characteristics of a good alarm as follows:

- Relevant – not spurious or of low operational value.

- Unique – not duplicating another alarm.

- Timely – not long before any response is required or too late to do anything.

- Prioritised – indicating the importance that the operator deals with the problem.

- Understandable – having a message that is clear and easy to understand.

- Diagnostic – identifying the problem that has occurred.

- Advisory – indicative of the action to be taken.

- Focusing – drawing attention to the most important issues.

These characteristics are not always evident in alarm systems. Even when individual alarms may seem ‘well-designed’ they may not work in the context of the system as a whole and the users’ activities.
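
For readers who define their own alerts in code, some of these characteristics can be read as a checklist on an alert definition. The following is a minimal sketch in Python, not a prescribed format: the `AlarmDefinition` class, its field names, the `review` function and the example alerts are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass
from enum import IntEnum


class Priority(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class AlarmDefinition:
    """Hypothetical alert definition; field names are assumptions."""
    name: str
    condition: str        # what is being detected (Diagnostic)
    message: str          # wording shown to the user (Understandable)
    priority: Priority    # importance of dealing with it (Prioritised)
    advised_action: str   # what the user should do (Advisory)


def review(definitions):
    """Return (alarm name, problem) pairs for a set of definitions."""
    problems = []
    seen_conditions = {}
    for d in definitions:
        if not d.message.strip():
            problems.append((d.name, "no message"))
        if not d.advised_action.strip():
            problems.append((d.name, "no advised action"))
        # Unique: two alarms watching the same condition duplicate each other.
        if d.condition in seen_conditions:
            problems.append((d.name, f"duplicates {seen_conditions[d.condition]}"))
        else:
            seen_conditions[d.condition] = d.name
    return problems


definitions = [
    AlarmDefinition("disk_full", "disk_used_pct > 90", "Disk almost full on db host",
                    Priority.HIGH, "Free space or extend the volume"),
    AlarmDefinition("disk_warn", "disk_used_pct > 90", "Disk usage high",
                    Priority.MEDIUM, ""),
]
problems = review(definitions)
```

A check like this can only catch mechanical omissions. Whether an alarm is relevant, timely or focusing depends on the system as a whole and on the users’ activities, which is where the questions below come in.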

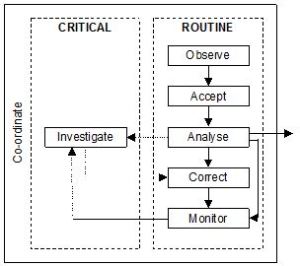

This post raises a number of questions for consideration in the design of alarm systems, framed in a model of alarm-handling activity. The questions may help in the development of an alarm philosophy (one of the first steps in alarm management), or in discussion of an existing system. The principles were originally derived from evaluations of two different control and monitoring systems for two ATC centres (see Shorrock and Scaife, 2001; Shorrock et al, 2002, p178); environments which share much in common with web engineering and operations. The resultant principles are included in this article as questions for consideration for readers as designers and co-designers, structured around seven alarm-handling activities (Observe, Accept, Analyse, Investigate, Correct, Monitor, and Co-ordinate). This is illustrated and outlined below. In reality, alarm handling is not so linear; there are several feedback loops. But the general activities work for a discussion of some of the detailed design implications.

Model of alarm initiated activities (adapted from Stanton, 1994).

Design questions are raised for each stage of alarm handling. The questions may be useful in discussions involving users, designers and other relevant stakeholders. They may help to inform an alarm philosophy or an informal exploration of an alarm system from the viewpoint of user activity. In most cases, the questions are applicable primarily in one stage of alarm handling, but also have a bearing on other stages, depending on the system in question. It should be possible to answer ‘Yes’ to most questions that are applicable, but a discussion around the questions is a useful exercise, and may prompt the need for more HF/E design expertise.

Observation

Observation is the detection of an abnormal condition or state within the system (i.e., a raised alarm). At this stage, care must be taken to ensure that coding methods (colour and flash/blink, in particular) support alarm monitoring and searching. Excessive use of highly saturated colours and blinking can de-sensitise the user and reduce the attention-getting value of alarms. Any use of auditory alarms should further support observation without causing frustration due to the need to accept alarms in order to silence the auditory alert, which can change the ‘alarm handling’ task to an ‘alarm silencing’ task.

1. Is the purpose and relevance of each alarm clear to the user?

2. Do alarms signal the need for action?

3. Are alarms presented in chronological order, and recorded in a log (e.g. time stamped) in the same order?

4. Are alarms relevant and worthy of attention in all the operating conditions and equipment states?

5. Can alarms be detected rapidly in all operating (including environmental) conditions?

6. Is it possible to distinguish alarms immediately (i.e., different alarms, different operators, alarm priority)?

7. Is the rate at which alarm lists are populated manageable by the user(s)?

8. Do auditory alarms contain enough information for observation and initial analysis, and no more?

9. Are alarms designed to avoid annoyance or startle?

10. Does an indication of the alarm remain until the user is aware of the condition?

11. Does the user have control over automatically updated information, so that information important to them at any specific time does not disappear from view?

12. Is it possible to switch off an auditory alarm independent of acceptance, while ensuring that it repeats after an appropriate period if the problem is not resolved?

13. Is failure of an element of the alarm system made obvious to the user?
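
Question 12 can be made concrete with a small sketch. It assumes a simplified alarm state in which silencing and accepting are separate actions and time is a plain number of seconds; `AudibleAlarm`, `silence` and `should_sound` are hypothetical names, not taken from any particular monitoring tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AudibleAlarm:
    """Hypothetical alarm state; times are plain seconds for simplicity."""
    name: str
    active: bool = True                  # the underlying problem still exists
    accepted: bool = False               # the user has acknowledged the alarm
    silenced_at: Optional[float] = None  # when the sound was last muted

    def silence(self, now: float) -> None:
        # Silencing only mutes the sound; it does not accept the alarm.
        self.silenced_at = now

    def should_sound(self, now: float, repeat_after: float) -> bool:
        if not self.active or self.accepted:
            return False
        if self.silenced_at is None:
            return True
        # Sound again once the repeat period has passed without resolution.
        return now - self.silenced_at >= repeat_after


alarm = AudibleAlarm("replication_lag")
alarm.silence(now=0.0)
```

With a repeat period of 300 seconds, this alarm stays quiet at 60 seconds and sounds again at 300 seconds, while remaining unaccepted throughout. Keeping the two actions apart is one way to stop ‘alarm handling’ turning into ‘alarm silencing’.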

Acceptance

Acceptance is the act of acknowledging the receipt and awareness of an alarm. At this stage, user acceptance should be reflected in other elements of the system that are providing alarm information. Alarm systems should aim to reduce user workload to manageable levels; excessive demands for acknowledgement increase workload and unwanted interactions. For instance, careful consideration is required to determine whether cleared alarms really need to be acknowledged. Group acknowledgement of several alarms (e.g. using click-and-drag or a Shift key) may lead to unrelated alarms being masked in a block of related alarms. Single acknowledgement of each alarm, however, can increase workload and frustration, and an efficiency-thoroughness trade-off can lead to alarms being acknowledged unintentionally as task demands increase. It can be preferable to allow group acknowledgement only of alarms for the same system.

14. Has the number of alarms that require acceptance been reduced as far as is practicable?

15. Is multiple selection of alarm entries in alarm lists designed to avoid unintended selection?

16. Is it possible to view the first unaccepted alarm with a minimum of action?

17. In multi-user systems, is only one user able to accept and/or clear alarms displayed at multiple workstations?

18. Is it only possible to accept an alarm from where sufficient alarm information is available?

19. Is it possible to accept alarms with a minimum of action (e.g., double click), from the alarm list or mimic?

20. Is alarm acceptance reflected by a change on the visual display (e.g. visual marker and the cancellation of attention-getting mechanisms), which prevails until the system state changes?
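
One way to limit the risk of unrelated alarms being masked in a block is to allow group acknowledgement only within a single system. The sketch below illustrates that idea under simple assumptions; the `Alarm` class, the `accept_group` function and the system names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Alarm:
    alarm_id: int
    system: str
    accepted: bool = False


def accept_group(alarms, selected_ids):
    """Accept the selected alarms only if they all belong to one system.

    Returns the ids accepted. Raises ValueError on a mixed selection, so
    that unrelated alarms are not acknowledged as part of a block.
    """
    selected = [a for a in alarms if a.alarm_id in selected_ids]
    systems = {a.system for a in selected}
    if len(systems) > 1:
        raise ValueError(f"selection spans several systems: {sorted(systems)}")
    for a in selected:
        a.accepted = True
    return [a.alarm_id for a in selected]


alarms = [Alarm(1, "payments"), Alarm(2, "payments"), Alarm(3, "search")]
accepted = accept_group(alarms, {1, 2})
```

Here the two payments alarms are accepted together, while a selection that also included the search alarm would be rejected as a whole. Whether rejection, a warning or a confirmation step suits users best is a design question to explore with them.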

Analysis

Analysis is the assessment of the alarm within the task and system context, leading to the prioritisation of that alarm. Alarm lists can be problematic, but, if properly designed, they can support the user’s preference for serial fault or issue management. Effective prioritisation of alarm list entries can help users at this stage. Single ‘all alarm’ lists can make it difficult to handle alarms by shifting the processing debt to the user. However, a limited number of separate alarm lists (e.g., by system, function, priority, acknowledgement, etc.) can help users to decide whether to ignore, monitor, correct or investigate the alarm.

21. Does alarm presentation, including conspicuity, reflect alarm priority with respect to the severity of consequences of delay in recognising the problem?

22. When the number of alarms is large, is there a means to filter the alarm display by appropriate means (e.g. sub-system or priority)?

23. Are users able to suppress or shelve certain alarms according to system mode and state, and see which alarms have been suppressed or shelved? Are there means to document the reason for suppression or shelving?

24. Are users prevented from changing alarm priorities?

25. Does the highest priority signal always over-ride, automatically?

26. Is the coding strategy (colour, shape, blinking/flashing, etc) the same for all display elements?

27. Are users given the means to recall the position of a particular alarm (e.g. periodic divider lines)?

28. Is alarm information (terms, abbreviations, message structure, etc) familiar to users and consistent when applied to alarm lists, mimics and message/event logs?

29. Is the number of coding techniques at the required minimum? (Dual coding [e.g., symbols and colours] may be needed to indicate alarm status and improve analysis.)

30. Can alarm information be read easily from the normal operating position?
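
Questions 22 and 23 lend themselves to a short sketch: filtering by sub-system or priority, and shelving that requires a documented reason and stays visible. The names `Alarm`, `shelve`, `visible` and `shelved`, and the convention that a higher number means a more urgent alarm, are assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Alarm:
    name: str
    subsystem: str
    priority: int                         # higher number = more urgent (assumed)
    shelved_reason: Optional[str] = None  # None means the alarm is not shelved


def shelve(alarm: Alarm, reason: str) -> None:
    """Shelve an alarm, refusing to do so without a documented reason."""
    if not reason.strip():
        raise ValueError("a reason is required to shelve an alarm")
    alarm.shelved_reason = reason


def visible(alarms, subsystem=None, min_priority=0):
    """Unshelved alarms matching the filter, most urgent first."""
    matches = [
        a for a in alarms
        if a.shelved_reason is None
        and (subsystem is None or a.subsystem == subsystem)
        and a.priority >= min_priority
    ]
    return sorted(matches, key=lambda a: -a.priority)


def shelved(alarms):
    """Shelved alarms stay inspectable, together with the reason."""
    return [(a.name, a.shelved_reason) for a in alarms if a.shelved_reason]


alarms = [
    Alarm("cache_miss_rate", "cache", 1),
    Alarm("db_replica_down", "database", 3),
    Alarm("db_slow_queries", "database", 2),
]
shelve(alarms[2], "known issue during planned migration")
```

After shelving, `visible(alarms, subsystem="database")` returns only the replica alarm, and `shelved(alarms)` still lists the slow-query alarm with its reason, so suppression does not become invisible.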

Investigation

Investigation is any activity that aims to discover the underlying factors in order to deal with the fault or problem. At this stage, system schematics or other such diagrams can be helpful. Coding techniques (e.g., group, colour, shape) again need to be considered fully to ensure that they support this stage without detracting from their usefulness elsewhere. Displays of system performance need to be designed carefully in terms of information presentation, ease of update, etc.

31. Is relevant information (e.g. operational status, equipment setting and reference) available with a minimum of action?

32. Is information on the likely cause of an alarm available?

33. Is a usable graphical display concerning a displayed alarm available with a single action?

34. When multiple display elements are used, are individual elements visible (not obscured)?

35. Are visual mimics spatially and logically arranged to reflect functional or naturally occurring relationships?

36. Is navigation between screens, windows, etc, quick and easy, requiring a minimum of user action?

Correction

Correction is the application of the results of the previous stages to address the problem(s) identified by the alarm(s). At this stage, the HMI must allow timely and error-tolerant command entry, if the fault can be fixed remotely. For instance, any command windows should be easily called-up, user memory demands for commands should be minimised, help or instructions should be clear, upper and lower case characters should be treated equivalently, and positive feedback should be presented to show command acceptance.

37. Does every alarm have a defined response and provide guidance or indication of what response is required?

38. If two alarms for the same system have the same response, has consideration been given to grouping them?

39. Is it possible to view status information during fault correction?

40. Are cautions used for operations that might have detrimental effects?

41. Is alarm clearance indicated on the visual display, both for accepted and unaccepted alarms?

42. Are local controls positioned within reach of the normal operating position?
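
Questions 37 and 38 can be checked mechanically where alarms are kept in a catalogue. The sketch below assumes a hypothetical catalogue that maps each alarm name to its system and documented response; the alarm names, responses and function names are invented for illustration.

```python
from collections import defaultdict

# Hypothetical alarm catalogue: name -> (system, documented response or None).
catalogue = {
    "api_5xx_rate": ("api", "Roll back the latest deploy"),
    "api_latency_p99": ("api", "Roll back the latest deploy"),
    "queue_depth": ("workers", "Scale up the worker pool"),
    "cert_expiry": ("edge", None),
}


def missing_response(catalogue):
    """Names of alarms with no defined response."""
    return sorted(name for name, (_, response) in catalogue.items() if not response)


def grouping_candidates(catalogue):
    """Groups of alarms on the same system that share the same response."""
    groups = defaultdict(list)
    for name, (system, response) in catalogue.items():
        if response:
            groups[(system, response)].append(name)
    return {key: sorted(names) for key, names in groups.items() if len(names) > 1}
```

On this catalogue, `missing_response` flags `cert_expiry`, and `grouping_candidates` suggests that the two API alarms might be presented as one. The output is a prompt for discussion, not a decision: two alarms with the same response may still carry different diagnostic information.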

Monitoring

Monitoring is the assessment of the outcome of the Correction stage. At this stage, the HMI (including schematics, alarm clears, performance data and message/event logs) needs to be designed to reduce memory demand and the possibility of interpretation problems (e.g., the ‘confirmation bias’).

43. Is the outcome of the Correction stage clear to the user? (A number of questions primarily associated with observation become relevant to monitoring.)

Co-ordination

Co-ordination between operators is required to work collaboratively. This may involve delegating authority for specific issues to colleagues, or co-ordinating efforts for problems that permeate several different parts of the overall system.

44. Are shared displays available to show the location of operators in the system, areas of responsibility, etc?

Alarm design and you

The trouble with alarm design is that it seems obvious. It’s not. Control rooms around the world are littered with alarms designed via ‘common sense’, which do not meet users’ needs. Even alarms designed by you for you may not meet your needs, or others’ needs. There are many reasons for this. For example:

- We are not always sure what we need. Our needs can be hard to access and they can conflict with other needs and wants. What we might want, such as a spectrum of colours for all possible systems states, is not what we need in order to see and understand what’s going on.

- We find it hard to understand and reconcile the various needs of different people in the system. There is an old joke among air traffic controllers that if you ask 10 controllers you will get 10 different answers…minimum. Going up and out to other stakeholders, the variety amplifies.

- We find it hard to understand system behaviour, including its boundaries, cascades and hidden interactions. We all tend to define system boundaries, priorities and interactions from a particular perspective, typically our own.

- We are not all natural designers. Many alarm systems show a lack of understanding of coding (colour, shape, size, rate…), control, chunking, consistency, constancy, constraints, and compatibility…and that’s just the c’s! What looks good is not necessarily what works good…

This is where the delicate interplay of design thinking, systems thinking and humanistic thinking comes into play. Understanding the nature of alarm handling, and the associated design issues, can help you – the expert in your work – to be a more informed user, helping to bring about the best alarm systems to support your work.

References

Allspaw, J. (2016). HF/E Practice in Web Engineering and Operations. In Shorrock, S.T. and Williams, C.A.W. (Eds). (in prep). Human Factors and Ergonomics in Practice. Ashgate.

Kemeny, J.G. (Chairman) (1979). President’s Commission on the Accident at Three Mile Island. Washington DC.

Shorrock, S.T., MacKendrick, H., Hook, M., Cummings, C. and Lamoureux, T. (2001). The development of human factors guidelines with automation support. Proceedings of People in Control: An International Conference on Human Interfaces in Control Rooms, Cockpits and Command Centres, UMIST, Manchester, UK: 18 – 21 June 2001.

Shorrock, S.T. and Scaife, R. (2001). Evaluation of an alarm management system for an ATC Centre. In D. Harris (Ed.) Engineering Psychology and Cognitive Ergonomics: Volume Five – Aerospace and Transportation Systems. Aldershot: Ashgate, UK.

Shorrock, S.T., Scaife, R. and Cousins, A. (2002). Model-based principles for human-centred alarm systems from theory and practice. Proceedings of the 21st European Annual Conference on Human Decision Making and Control, 15th and 16th July 2002, The Senate Room, University of Glasgow.

Stanton, N. (1994). Alarm initiated activities. In N. Stanton (Ed.), Human Factors in Alarm Design. Taylor and Francis: London, pp. 93-118.

This post is adapted from an article in HindSight 22 on Safety Nets in December 2015. Some of the article is adapted from Shorrock et al, 2002. Many thanks to Daniel Schauenberg (@mrtazz) and Mathias Meyer (@travisci) for helpful comments on an earlier draft of this post.

What about EEMUA 191?


Never even knew PayPal was down for 2 hours – that’s huge news.
