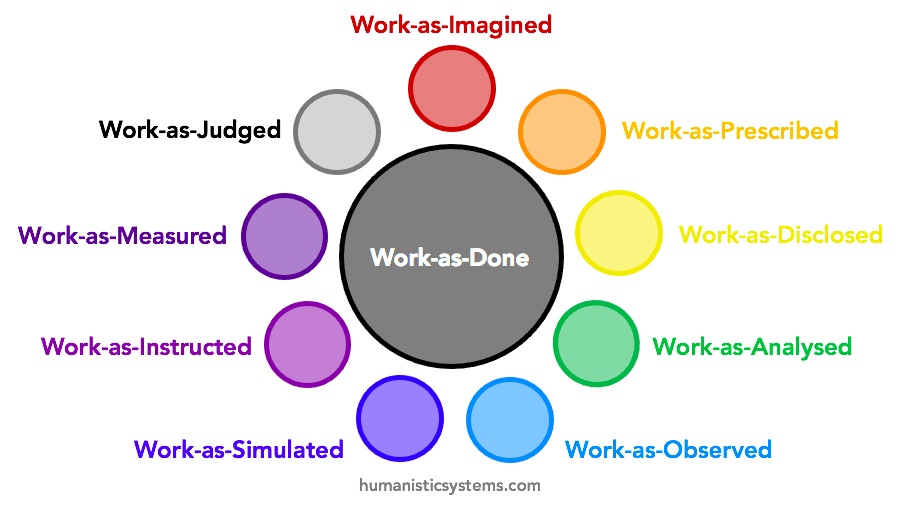

In any attempt to understand or intervene in the design and conduct of work, we can consider several kinds of ‘work’. We are not usually considering actual purposeful activity – work-as-done. Rather, we use ‘proxies’ for work-as-done as the basis for understanding and intervention. In this series of short posts, I briefly outline some of these proxies. (See here for a fuller introduction to the series.)

Other Posts in the Series

- Work-as-Imagined

- Work-as-Prescribed

- Work-as-Disclosed

- Work-as-Analysed

- Work-as-Observed

- Work-as-Simulated

- Work-as-Instructed

- Work-as-Measured (this post)

- Work-as-Judged

Work-as-Measured

Work-as-measured is the quantification or classification of aspects of work.

Function and Purpose: Work-as-measured is intended to help assess, understand, and evaluate work. Its purposes relate to the selection, design, and evaluation of workers, tools, procedures, working methods, working environments, and jobs, with respect to all aspects of effectiveness in work and system performance (e.g., productivity, efficiency, safety, quality, comfort, wellbeing).

Form: A common classification distinguishes four levels, or scales, of measurement: nominal, ordinal, interval, and ratio (Stevens, 1975), though whether nominal classification counts as ‘measurement’ is sometimes disputed. Other typologies exist, for instance ones that include grades (e.g., beginner, intermediate, advanced). Measures are often characterised as ‘objective’ or ‘subjective’, depending on whether the measured attribute has a verifiable existence in the external world, independent of any feeling, opinion, attitude, or judgment. However, subjectivity is present in the selection, collection, analysis, interpretation, and evaluation of measures and associated data.
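To make the distinction between scales more concrete, the sketch below encodes the four scales and picks the average conventionally treated as meaningful for each: the mode for nominal data, the median for ordinal data, and the mean for interval and ratio data. The names `Scale`, `central_tendency`, and `supports_ratios` are hypothetical illustrations. The mapping follows the common textbook treatment of Stevens' scales, which some statisticians regard as too strict.

```python
from enum import IntEnum
from statistics import mean, median_low, mode


class Scale(IntEnum):
    """Stevens' four scales of measurement, ordered from weakest to strongest."""
    NOMINAL = 1   # unordered categories, e.g. task type
    ORDINAL = 2   # ordered categories, e.g. beginner < intermediate < advanced
    INTERVAL = 3  # equal intervals, arbitrary zero, e.g. temperature in degrees Celsius
    RATIO = 4     # equal intervals and a true zero, e.g. task duration in seconds


def central_tendency(values, scale):
    """Return the 'strongest' average conventionally treated as meaningful for a scale."""
    if scale >= Scale.INTERVAL:
        return mean(values)        # differences between values are meaningful
    if scale == Scale.ORDINAL:
        return median_low(values)  # assumes ordered codes; never averages two ranks
    return mode(values)            # only the frequency of categories is meaningful


def supports_ratios(scale):
    """Statements such as 'twice as long' are only meaningful on a ratio scale."""
    return scale == Scale.RATIO


# Hypothetical usage
print(central_tendency(["inspect", "inspect", "repair"], Scale.NOMINAL))  # inspect
print(central_tendency([1, 2, 2, 3], Scale.ORDINAL))  # 2 (1=beginner ... 3=advanced)
print(central_tendency([30, 45, 60], Scale.RATIO))    # 45
```

`median_low` is used rather than `median` so that an ordinal grade is never reported as a value halfway between two grades, such as 2.5.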

Agency: Work may be measured by people in a variety of functions, such as supervision and management, quality, safety, human factors and ergonomics, human performance, psychology, sociology, ethnography, kinesiology, occupational therapy, and engineering.

Variety: There is significant variation in work-as-measured, with many possibilities for measuring outputs (e.g., motor output, natural language), subjective responses (e.g., from interviews, questionnaires), and physical and physiological variables (e.g., muscle fatigue, joint strength, heart rate variability, eye movements). These data relate to many constructs (e.g., mental workload, situation awareness, vigilance, stress, teamwork, job satisfaction) and to work output (e.g., lane-keeping when driving, alarms identified in a control room, number of aircraft on frequency for an air traffic controller). Observation and self-report measures are popular in many disciplines and professions, while physiological measures (e.g., electroencephalographic, electrocardiographic, electromyographic, skin conductance, respiratory, body fluid, ocular, and blood pressure) are increasingly common in research and practice in some disciplines. Variety in work-as-measured is strongly influenced by the multiple interacting contexts of work (e.g., societal, legal and regulatory, organisational and institutional, social and cultural, personal, physical and environmental, temporal, informational, and technological). These contexts can pull in opposite directions. Changes in the technological context, for example, open up many possibilities for work-as-measured (e.g., via voice and video recording), but these may be resisted in light of the social and cultural context, or fall foul of the legal and regulatory context.

Stability: The stability of work-as-measured can vary significantly. Many measures of work score poorly on reliability (consistency over time and consensus between measurements) when evaluated formally and independently. The stability of work-as-measured may depend on, for example: who conducts the measurement and their interests and characteristics (e.g., incentives, knowledge, focus of attention, physiological state, attitudes, independence, and relationships); the timing and period of measurement (e.g., of the day, week, month, year; period of shift); where measures are taken (e.g., environmental conditions, field of view); how measures are taken (e.g., via self-report, observation, instrumentation); and why measures are taken (e.g., comparison with performance targets, consequences on job). For these reasons, even seemingly ‘objective’ measures, such as physical or output measures, can be less stable than assumed.
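Consensus between measurements can be quantified. One common index, for two observers assigning the same episodes to categories, is Cohen's kappa. It corrects raw percentage agreement for the agreement expected by chance, given how often each observer uses each category. The sketch below is a minimal illustration. The function name and the observation codes are hypothetical. Other indices, such as the intraclass correlation for continuous ratings or Krippendorff's alpha for more than two raters, may suit other designs better.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning nominal codes to the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must code the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same code by both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal share, summed over codes.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_chance = sum(counts_a[code] * counts_b[code] for code in counts_a) / n**2
    if p_chance == 1:
        return 1.0  # both raters used one identical code throughout; treated as perfect here
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical codes from two observers watching the same ten task episodes
observer_1 = ["normal", "normal", "workaround", "normal", "workaround",
              "normal", "normal", "normal", "workaround", "normal"]
observer_2 = ["normal", "workaround", "workaround", "normal", "normal",
              "normal", "normal", "normal", "workaround", "normal"]
print(round(cohens_kappa(observer_1, observer_2), 2))  # 0.52
```

In this invented example the observers agree on 80% of episodes, but kappa is only about 0.52 once chance agreement is removed. This is one reason why raw agreement figures can overstate the stability of a measure.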

Fidelity: Work-as-measured varies significantly in terms of fidelity (termed ‘validity’ in several disciplines). Work-as-measured should actually measure what it claims to measure, and not something else (construct validity). If a measure claims to measure mental workload, it should measure workload, not fatigue, for instance. Work-as-measured may have predictive value in terms of outcomes or future work-as-done (predictive validity), and any such claims should be validated. For instance, selection tests and human reliability assessments for visual inspection performance should predict task performance as measured by validated measures of visual inspection, including outcome measures. Similarly, work-as-measured may claim to account for past work-as-done (retrospective validity). An annual performance appraisal should reflect work-as-done over the relevant period. Work-as-measured may also correlate with related measures obtained for the same work-as-done (concurrent validity). For air traffic controllers, for instance, self-assessed workload ratings should correlate with established objective measures of workload (e.g., for traffic load and complexity). Measures derived from artificial settings should be valid in real-world conditions (ecological validity), if the measure is designed to be used in both environments, or if conclusions from one setting are assumed to transfer to another. An example is a self-report workload measure developed in a simulator environment, where the measure is intended for use in the real environment or where conclusions drawn from work-as-simulated are claimed to predict workload during work-as-done. Work-as-measured should generalise beyond the context of the development of the measure to the context of work-as-done (external validity). Participants involved in the development of physiological measures of performance should be representative of workers as a whole. For instance, measures based on research on experts may not apply to novices, and measures derived from men may not apply to women.
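Concurrent validity is often assessed by correlating a measure with an established measure of the same work. The sketch below computes a Pearson correlation between self-assessed workload ratings and a traffic-load count for the same periods. The function name `pearson_r` and all the data are hypothetical, invented for illustration.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length samples with at least two values")
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined when a sample has no variance")
    return cov / (sx * sy)


# Hypothetical data for six periods of one controller's shift
ratings = [2, 3, 5, 4, 6, 7]      # self-rated workload on a 1-7 scale
aircraft = [4, 6, 9, 8, 11, 13]   # aircraft on frequency in the same periods
r = pearson_r(ratings, aircraft)
print(round(r, 3))
```

Self-rated workload is arguably ordinal, so a rank-based coefficient such as Spearman's rho may be more defensible. A high correlation also shows only that the two measures move together. It does not show that either measure captures workload rather than, say, fatigue.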

In practice, work-as-measured is often assumed to have more validity than it really does, and it often does not measure what it claims to measure. Measures of aspects of work-as-done are also often overgeneralised, not evaluated properly in situ, or affected by biases, especially when there are perceived or real adverse consequences for poor measures (work-as-judged). Many aspects of work-as-done (especially work in the head, coordination, and social behaviour) cannot be measured with high fidelity. Quantitative measures can, via precision bias and false precision, appear more valid than they really are. Finally, work-as-measured can be affected by perceived and actual consequences, such as incentives and punishments linked to performance targets and limits. As noted earlier, these may affect the selection, collection, analysis, interpretation, and evaluation of measures and associated data. A practical example is the four-hour target in UK hospitals, under which accident and emergency (A&E) departments must admit, transfer, or discharge patients within four hours of arrival. This target has been associated with reductions in recorded waiting times, but also with peaks in admissions and discharges just before the four-hour limit and occasional data falsification, without sustained improvements in patient care.
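Routine data can be checked for a pattern in which recorded times peak just before a limit. The sketch below is a crude, hypothetical heuristic, not a method taken from the source. It compares the number of recorded stays ending in the ten minutes before a 240-minute limit with the average number ending in the adjacent ten-minute windows on either side. The function name, the window width, and the data are all assumptions.

```python
def bunching_ratio(durations_min, limit=240, width=10):
    """Crude heaping check: stays ending in the `width` minutes before `limit`,
    divided by the mean count in the adjacent windows on either side."""
    def count(lo, hi):
        return sum(lo <= d < hi for d in durations_min)

    just_before = count(limit - width, limit)
    neighbours = (count(limit - 2 * width, limit - width) + count(limit, limit + width)) / 2
    if neighbours == 0:
        raise ValueError("no stays in the neighbouring windows; ratio undefined")
    return just_before / neighbours


# Hypothetical times from arrival to departure, in minutes
durations = [225] * 5 + [235] * 20 + [245] * 5
print(bunching_ratio(durations))  # 4.0
```

A ratio well above 1 suggests that work, or the recording of work, is being shaped by the target. However, it cannot by itself distinguish genuinely faster care from reclassification or falsification.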

Completeness: It is not possible to measure all aspects of work all of the time, but work-as-measured should at least measure a representative sample of the work-as-done that it claims to represent (content validity). This is a common weakness of work-as-measured, which can focus on limited fragments of work and outcomes that are easy to measure but do not reflect work as a whole. Work-as-measured should also be representative of the people and conditions in the real work-as-done environment. This can pose challenges for measures taken in laboratories or simulated environments, or with non-representative workers (e.g., ‘superusers’ on project teams, who have much higher competence with new technology than ordinary users).
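A basic check on representativeness is to compare the make-up of the measured sample with the make-up of work-as-done along a dimension that matters, such as shift period or worker experience. The sketch below flags categories whose share in the sample differs from their share in work-as-done by more than a chosen tolerance. The function name, the 10-percentage-point tolerance, and the example data are hypothetical. Matching on one dimension also does not guarantee that the sample covers the content of the work.

```python
from collections import Counter


def representation_gaps(sample, population, tolerance=0.10):
    """Return {category: (sample_share, population_share)} for categories whose share
    in the measured sample differs from their share in work-as-done by more than
    `tolerance` (an absolute difference in proportions)."""
    if not sample or not population:
        raise ValueError("sample and population must both be non-empty")
    s_counts, p_counts = Counter(sample), Counter(population)
    gaps = {}
    for category in set(s_counts) | set(p_counts):
        s_share = s_counts[category] / len(sample)
        p_share = p_counts[category] / len(population)
        if abs(s_share - p_share) > tolerance:
            gaps[category] = (s_share, p_share)
    return gaps


# Hypothetical shift periods: all shifts worked vs. shifts on which measures were taken
worked = ["day"] * 50 + ["evening"] * 20 + ["night"] * 30
measured = ["day"] * 16 + ["evening"] * 3 + ["night"] * 1
print(sorted(representation_gaps(measured, worked)))  # ['day', 'night']
```

In this invented case, night shifts make up 30% of the work but only 5% of the measured sample, so conclusions about night work would rest on very little data.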

Granularity: Work is measured at different levels of granularity, from coarse (e.g., performance appraisals, productivity measures) to fine (e.g., manual inputs, speech output, eye movements). The level of detail and flexibility will vary. No matter what the level of granularity, measurement tends to lack the richness and subtlety of actual work, including the many interdependencies and conditions.

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. CC BY-NC-ND 4.0